As artificial intelligence (AI) expands at an unprecedented rate, demand for efficient, scalable, and cost-effective models has become a top priority for organizations worldwide. Large-scale models like GPT-4, whose training reportedly cost about $78 million, show how much compute such efforts require. The financial and environmental costs of developing these models have raised significant concerns about their scalability, particularly for smaller enterprises. The energy consumed in training a model like GPT-3, for example, has been compared to powering an American household for decades.

Generative AI and other AI technologies have the potential to add trillions of dollars to the global economy, with one widely cited estimate suggesting that generative AI alone could contribute between $2.6 trillion and $4.4 trillion annually across industries. However, much of this economic benefit hinges on making these technologies more accessible and cost-efficient. This is where LoRA (Low-Rank Adaptation) fine-tuning emerges as a game-changing solution, offering businesses a way to deploy sophisticated AI models without incurring prohibitive costs.

By addressing the challenges of large-scale AI model deployment, LoRA fine-tuning provides a path toward AI scalability, enabling organizations to balance performance with cost-efficiency while contributing to the rapidly evolving AI landscape.

Let’s begin by understanding what LoRA is.

What is LoRA Fine-Tuning?

LoRA, which stands for Low-Rank Adaptation, is a technique for fine-tuning large language models efficiently. Traditional fine-tuning updates all of a model’s parameters, a process that is both time-consuming and resource-intensive. LoRA sidesteps this by freezing the pretrained weights and adding a set of small, trainable matrices that adapt the model to a new task without retraining the entire network. Because each weight update is expressed as the product of two low-rank matrices, far fewer parameters need to be trained. This not only accelerates fine-tuning but also significantly lowers the computational resources required, making it a highly scalable solution for various applications.

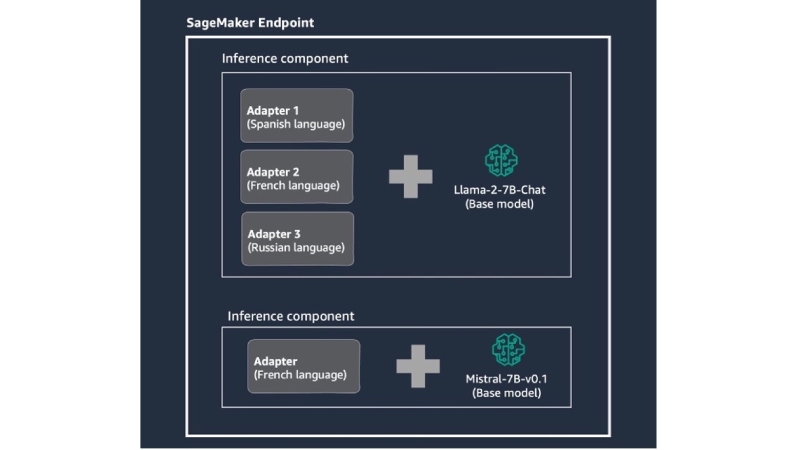
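To make the idea concrete, here is a minimal sketch of a single LoRA-adapted layer in plain Python. The class name `LoRALinear`, the helper `matvec`, and the `alpha / rank` scaling are illustrative choices rather than code from any particular library: the frozen weight `W` is left untouched, and the output adds a low-rank correction `B (A x)`.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

class LoRALinear:
    """A frozen linear layer plus a trainable low-rank update B @ A (illustrative)."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight           # pretrained weights, kept frozen
        self.scale = alpha / rank      # scaling applied to the low-rank update
        # A starts with small random values and B with zeros, so the layer
        # initially behaves exactly like the frozen base layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)                # W x
        update = matvec(self.B, matvec(self.A, x))   # B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]

weight = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
layer = LoRALinear(weight, rank=1)
x = [1.0, 0.0, -1.0]

untrained = layer.forward(x)   # identical to W x because B is all zeros

# Pretend that training has updated only the small matrices A and B.
layer.A = [[0.5, 0.0, 0.0]]
layer.B = [[1.0], [0.0]]
adapted = layer.forward(x)     # the base output shifted by the low-rank update
```

During fine-tuning only `A` and `B` would receive gradient updates; `weight` is never modified, which is why the base model's behavior is preserved when the adapter is removed.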

To learn more, I spoke with Vivek Gangasani, an AI Specialist at AWS. At the ML Conference in Munich in June 2024, he discussed the practical applications of LoRA adapters, focusing on their ability to optimize resource utilization in cloud environments while minimizing operational costs. He explained how LoRA can be deployed effectively without extensive computational resources. Working alongside his colleagues, he explored various frameworks that support multi-tenant LoRA serving, in which many adapters are served simultaneously on top of a shared base model. Their findings, shared on the organization’s blog, offer insights into how businesses can manage LoRA-adapted models efficiently, making the most of available resources while keeping expenses manageable.

Why is LoRA Important?

LoRA’s significance lies in its ability to enhance large language models (LLMs) without the heavy computational and financial burden of traditional fine-tuning. Because it updates only a small fraction of a model’s parameters, it significantly reduces the resources needed for fine-tuning and allows faster deployment.
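A quick back-of-the-envelope calculation shows how small that fraction can be. The layer size (4096 × 4096) and rank (8) below are assumed values chosen for illustration, not figures from the interview: a full update trains `d_in * d_out` values, while a rank-`r` update trains only `r * (d_in + d_out)`.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (full, lora, fraction) trainable-parameter counts for one weight matrix."""
    full = d_in * d_out            # every entry of the weight matrix
    lora = rank * (d_in + d_out)   # A is rank x d_in, B is d_out x rank
    return full, lora, lora / full

full, lora, fraction = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(f"full: {full:,}  lora: {lora:,}  share: {fraction:.2%}")
# prints "full: 16,777,216  lora: 65,536  share: 0.39%"
```

The saving grows with the size of the layer, since the full count scales with the product of the dimensions while the LoRA count scales with their sum.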

Vivek Gangasani explains: “By focusing on just a few low-rank matrices, LoRA allows us to fine-tune large models without losing the original model’s knowledge. This approach not only reduces the time and cost involved but also opens the door for more businesses to leverage advanced AI.”

This makes LoRA particularly relevant in sectors like healthcare and finance. In healthcare, LoRA-enhanced models help professionals sift through vast medical datasets, improving diagnostic accuracy and patient care. In finance, LoRA models are transforming customer service and market trend analysis by making data processing faster and more efficient.

Scalability and Customization

LoRA excels in scalability, allowing businesses to adapt one base model to multiple applications without retraining it entirely. This is especially valuable for companies needing to maintain several versions of a model, each tailored to specific regulatory requirements or customer needs.

Vivek emphasizes: “With LoRA, we can implement multi-tenant setups, serving thousands of fine-tuned models on a single infrastructure. This approach not only optimizes resource usage but also reduces overhead, making the process much more cost-efficient.” This scalability is being utilized in diverse industries such as education, where LoRA-enhanced models help tailor content to individual learning styles, making education more personalized.
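The multi-tenant idea can be sketched in a few lines. This is a simplified illustration, not the serving stack Vivek describes: `MultiAdapterLayer`, `register`, and the tenant names are hypothetical. One copy of the base weights is shared, and each request selects a small adapter by ID.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

class MultiAdapterLayer:
    """One shared base weight matrix with many small per-tenant adapters."""

    def __init__(self, base_weight):
        self.base_weight = base_weight   # loaded once, shared by every tenant
        self.adapters = {}               # adapter_id -> (A, B)

    def register(self, adapter_id, A, B):
        self.adapters[adapter_id] = (A, B)

    def forward(self, x, adapter_id=None):
        out = matvec(self.base_weight, x)
        if adapter_id is None:
            return out                   # plain base-model behavior
        A, B = self.adapters[adapter_id]
        delta = matvec(B, matvec(A, x))  # tenant-specific low-rank correction
        return [o + d for o, d in zip(out, delta)]

layer = MultiAdapterLayer([[1.0, 0.0], [0.0, 1.0]])
layer.register("tenant-a", A=[[1.0, 1.0]], B=[[1.0], [0.0]])
layer.register("tenant-b", A=[[1.0, -1.0]], B=[[0.0], [2.0]])

request = [3.0, 1.0]
base_out = layer.forward(request)                # [3.0, 1.0]
out_a = layer.forward(request, "tenant-a")       # [7.0, 1.0]
out_b = layer.forward(request, "tenant-b")       # [3.0, 5.0]
```

Adding a tenant costs only the memory for its `A` and `B` matrices, which is what makes serving many fine-tuned variants on shared infrastructure plausible.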

Cost-Effective Deployments

One of the key advantages of LoRA is its ability to democratize AI. Traditional fine-tuning requires expensive infrastructure, but LoRA’s efficient design reduces hardware needs, making it possible for smaller organizations to participate in AI development.

In cloud environments like Amazon SageMaker, companies can deploy hundreds of LoRA-adapted models at a fraction of the cost of hosting a separate full model for each one. This flexibility has proven essential in fields such as retail, where personalized shopping experiences rely on models that can process customer data in real time.

The Future of LoRA Fine-Tuning

Researchers and industry professionals are actively exploring methods to reduce the computational demands of AI models without compromising performance. One promising direction is the integration of LoRA with other compression techniques such as quantization and pruning. By combining these methods, models can be further optimized, enhancing efficiency while maintaining accuracy.
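As a rough illustration of how LoRA and quantization can fit together, the sketch below stores the frozen base weights as 8-bit integers and keeps only the small adapter in full precision. The absmax scheme and every function name here are assumptions made for the example; the article does not specify a particular quantization method.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def quantize_int8(matrix):
    """Absmax quantization: map floats to integers in [-127, 127] plus one scale."""
    absmax = max(abs(v) for row in matrix for v in row) or 1.0
    scale = absmax / 127.0
    return [[round(v / scale) for v in row] for row in matrix], scale

def dequantize(q_matrix, scale):
    """Recover approximate float weights from the quantized integers."""
    return [[q * scale for q in row] for row in q_matrix]

def quantized_lora_forward(q_weight, scale, A, B, x):
    """Frozen base stored as int8; the small adapter stays in full precision."""
    base = matvec(dequantize(q_weight, scale), x)
    delta = matvec(B, matvec(A, x))
    return [b + d for b, d in zip(base, delta)]

weight = [[0.8, -0.3, 0.1], [0.05, 0.6, -0.9]]
q_weight, scale = quantize_int8(weight)

A = [[0.2, 0.0, -0.1]]   # hypothetical trained adapter, rank 1
B = [[0.5], [-0.5]]
y = quantized_lora_forward(q_weight, scale, A, B, [1.0, 2.0, 3.0])
```

The base weights lose a little precision (at most half a quantization step per entry), while the adapter, which is where the task-specific learning lives, is kept exact.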

Another exciting development is adapting LoRA fine-tuned models for deployment on edge devices. This enables AI applications in IoT and mobile platforms that have limited computational resources, bringing sophisticated AI capabilities closer to end-users. By deploying models on edge devices, organizations can provide faster, more efficient services without relying heavily on centralized computing power.
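One property that may help on constrained devices is that a trained adapter can be folded back into the base weights before deployment, so the shipped model is the same size as the original and needs no extra computation at inference time. This is a general property of low-rank updates rather than something described in the interview, and `merge_lora` is a hypothetical helper written for this sketch.

```python
def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def merge_lora(weight, A, B, scale=1.0):
    """Return a new matrix W + scale * (B @ A) without modifying the inputs."""
    rank = len(A)
    return [
        [w + scale * sum(B[i][k] * A[k][j] for k in range(rank))
         for j, w in enumerate(row)]
        for i, row in enumerate(weight)
    ]

W = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, -1.0]]        # rank-1 adapter
B = [[0.5], [2.0]]
x = [2.0, 1.0]

# Unmerged: base output plus the adapter's correction, computed separately.
unmerged = [b + d for b, d in zip(matvec(W, x), matvec(B, matvec(A, x)))]

# Merged: a single matrix of the original shape gives the same result.
merged_W = merge_lora(W, A, B)
merged = matvec(merged_W, x)
```

The trade-off is flexibility: a merged model serves one task, whereas keeping adapters separate allows switching between tasks on a shared base.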

Real-World Applications

LoRA fine-tuning is already making a substantial impact across various industries. In healthcare, fine-tuned models assist in diagnostics by analyzing medical records and imaging data with greater accuracy and speed, leading to improved patient outcomes. These models help medical professionals make more informed decisions, enhancing the overall quality of care.

Financial institutions are utilizing LoRA-adapted models for fraud detection and risk assessment. Banks can process vast amounts of data efficiently, identifying fraudulent activities more quickly and accurately. This not only safeguards assets but also enhances customer trust and compliance with regulatory standards.

In the retail sector, personalized shopping experiences are being elevated through models that understand customer preferences. By fine-tuning AI models on consumer data, retailers can drive sales and increase customer satisfaction with tailored recommendations and promotions. This personalized approach fosters customer loyalty and boosts revenue.

Conclusion

LoRA fine-tuning has emerged as a transformative technique, enabling businesses to leverage the power of large models without incurring high costs or resource demands. As Vivek puts it: “LoRA offers a smarter, more scalable approach to AI, making it accessible to organizations of all sizes. It’s not just about fine-tuning models efficiently; it’s about making AI technology more adaptable and practical for real-world applications.”

As AI continues to evolve, LoRA’s role in shaping the future of customizable and scalable AI solutions will likely expand, revolutionizing industries from healthcare to content creation.

- Can LoRA Fine-Tuning Revolutionize AI Scalability and Cost Efficiency? - October 11, 2024
